Servers

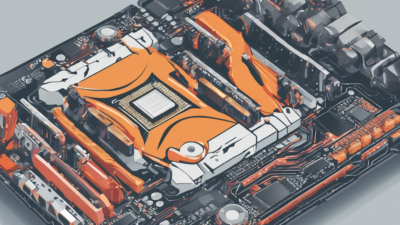

RTX 4090 Server Setup Step-by-Step Guide

This step-by-step guide shows how to turn a consumer RTX 4090 into a server for AI workloads. It covers the hardware build, Ubuntu installation, CUDA setup, and model deployment, with a stated target of 500+ tokens/second for LLM inference without cloud costs.
